Yolo v3已過時了,本篇只是記錄而以。請參照 v4 or v7 之說明

安裝套件

pip install tensorflow matplotlib Pillow opencv-python opencv-contrib-python

安裝YOLOV3

YOLO只是一個演算模型,此模型只說明第一步用啥演算法,第二步…..。這些演算法全部放在 yolov3.cfg 這個檔案裏面。所以不算是一個sdk。

不過YOLO提供了一些函數方便我們的app可以調用,這些函數放在yolo.py檔安及yolo3目錄中。官網提供的這些函數都是採用 Tensorflow v1的代碼,無法在 Tensorflow v2的版本執行。本人已將官網的代碼全部轉成v2的版本,且可以直接在pycharm之下直接執行,載點如下

yolo3_for_tf2 <==todo

請將yolo3_for_tf2.zip解開,將裏面的檔案及代碼 copy到專案之下,裏面有font, yolo3目錄及 settings.py convert.py yolov3.cfg yolo.py…..

下載權重

權重,其實就是訓練後的模型資料,這些資料也就是日後偵測新物件時要載入的模型。官網有事先訓練完成的模型,可辨識80種物件,下載網址如下。請將下載後的yolov3.weights檔案 copy 到專案之下即可。

wget https://pjreddie.com/media/files/yolov3.weights

轉換權重

YOLO 官網預訓練的權重為yolo格式,但我們目前是使用 tensorflow .keras,所以需使用如下代碼,將權重轉換成 .h5的keras格式。

請注意, .h5這個權重檔案,就是等會要偵測的模型,而且也是日後要訓練自已模型的重要依據,絕對不能刪除

h5權重轉換.py

#! /usr/bin/env python

import configparser

import io

from collections import defaultdict

import numpy as np

import tensorflow.keras.backend as K

from tensorflow.keras.layers import (Conv2D, Input, ZeroPadding2D, Add,

UpSampling2D, MaxPooling2D, Concatenate)

from tensorflow.keras.layers import LeakyReLU

from tensorflow.keras.layers import BatchNormalization

from tensorflow.keras.models import Model

from tensorflow.keras.regularizers import l2

config_path='yolov3.cfg'

weights_path='yolov3.weights'

output_path='model_data/yolo.h5'

def unique_config_sections(config_file):

section_counters = defaultdict(int)

output_stream = io.StringIO()

with open(config_file) as fin:

for line in fin:

if line.startswith('['):

section = line.strip().strip('[]')

_section = section + '_' + str(section_counters[section])

section_counters[section] += 1

line = line.replace(section, _section)

output_stream.write(line)

output_stream.seek(0)

return output_stream

def main():

# Load weights and config.

print('Loading weights.')

weights_file = open(weights_path, 'rb')

major, minor, revision = np.ndarray(

shape=(3,), dtype='int32', buffer=weights_file.read(12))

if (major * 10 + minor) >= 2 and major < 1000 and minor < 1000:

seen = np.ndarray(shape=(1,), dtype='int64', buffer=weights_file.read(8))

else:

seen = np.ndarray(shape=(1,), dtype='int32', buffer=weights_file.read(4))

print('Weights Header: ', major, minor, revision, seen)

print('Parsing Darknet config.')

unique_config_file = unique_config_sections(config_path)

cfg_parser = configparser.ConfigParser()

cfg_parser.read_file(unique_config_file)

print('Creating Keras model.')

input_layer = Input(shape=(None, None, 3))

prev_layer = input_layer

all_layers = []

weight_decay = float(cfg_parser['net_0']['decay']

) if 'net_0' in cfg_parser.sections() else 5e-4

count = 0

out_index = []

for section in cfg_parser.sections():

print('Parsing section {}'.format(section))

if section.startswith('convolutional'):

filters = int(cfg_parser[section]['filters'])

size = int(cfg_parser[section]['size'])

stride = int(cfg_parser[section]['stride'])

pad = int(cfg_parser[section]['pad'])

activation = cfg_parser[section]['activation']

batch_normalize = 'batch_normalize' in cfg_parser[section]

padding = 'same' if pad == 1 and stride == 1 else 'valid'

# Setting weights.

# Darknet serializes convolutional weights as:

# [bias/beta, [gamma, mean, variance], conv_weights]

prev_layer_shape = K.int_shape(prev_layer)

weights_shape = (size, size, prev_layer_shape[-1], filters)

darknet_w_shape = (filters, weights_shape[2], size, size)

weights_size = np.product(weights_shape)

print('conv2d', 'bn'

if batch_normalize else ' ', activation, weights_shape)

conv_bias = np.ndarray(

shape=(filters,),

dtype='float32',

buffer=weights_file.read(filters * 4))

count += filters

if batch_normalize:

bn_weights = np.ndarray(

shape=(3, filters),

dtype='float32',

buffer=weights_file.read(filters * 12))

count += 3 * filters

bn_weight_list = [

bn_weights[0], # scale gamma

conv_bias, # shift beta

bn_weights[1], # running mean

bn_weights[2] # running var

]

conv_weights = np.ndarray(

shape=darknet_w_shape,

dtype='float32',

buffer=weights_file.read(weights_size * 4))

count += weights_size

# DarkNet conv_weights are serialized Caffe-style:

# (out_dim, in_dim, height, width)

# We would like to set these to Tensorflow order:

# (height, width, in_dim, out_dim)

conv_weights = np.transpose(conv_weights, [2, 3, 1, 0])

conv_weights = [conv_weights] if batch_normalize else [

conv_weights, conv_bias

]

# Handle activation.

act_fn = None

if activation == 'leaky':

pass # Add advanced activation later.

elif activation != 'linear':

raise ValueError(

'Unknown activation function `{}` in section {}'.format(

activation, section))

# Create Conv2D layer

if stride > 1:

# Darknet uses left and top padding instead of 'same' mode

prev_layer = ZeroPadding2D(((1, 0), (1, 0)))(prev_layer)

conv_layer = (Conv2D(

filters, (size, size),

strides=(stride, stride),

kernel_regularizer=l2(weight_decay),

use_bias=not batch_normalize,

weights=conv_weights,

activation=act_fn,

padding=padding))(prev_layer)

if batch_normalize:

conv_layer = (BatchNormalization(

weights=bn_weight_list))(conv_layer)

prev_layer = conv_layer

if activation == 'linear':

all_layers.append(prev_layer)

elif activation == 'leaky':

act_layer = LeakyReLU(alpha=0.1)(prev_layer)

prev_layer = act_layer

all_layers.append(act_layer)

elif section.startswith('route'):

ids = [int(i) for i in cfg_parser[section]['layers'].split(',')]

layers = [all_layers[i] for i in ids]

if len(layers) > 1:

print('Concatenating route layers:', layers)

concatenate_layer = Concatenate()(layers)

all_layers.append(concatenate_layer)

prev_layer = concatenate_layer

else:

skip_layer = layers[0] # only one layer to route

all_layers.append(skip_layer)

prev_layer = skip_layer

elif section.startswith('maxpool'):

size = int(cfg_parser[section]['size'])

stride = int(cfg_parser[section]['stride'])

all_layers.append(

MaxPooling2D(

pool_size=(size, size),

strides=(stride, stride),

padding='same')(prev_layer))

prev_layer = all_layers[-1]

elif section.startswith('shortcut'):

index = int(cfg_parser[section]['from'])

activation = cfg_parser[section]['activation']

assert activation == 'linear', 'Only linear activation supported.'

all_layers.append(Add()([all_layers[index], prev_layer]))

prev_layer = all_layers[-1]

elif section.startswith('upsample'):

stride = int(cfg_parser[section]['stride'])

assert stride == 2, 'Only stride=2 supported.'

all_layers.append(UpSampling2D(stride)(prev_layer))

prev_layer = all_layers[-1]

elif section.startswith('yolo'):

out_index.append(len(all_layers) - 1)

all_layers.append(None)

prev_layer = all_layers[-1]

elif section.startswith('net'):

pass

else:

raise ValueError(

'Unsupported section header type: {}'.format(section))

# Create and save model.

if len(out_index) == 0: out_index.append(len(all_layers) - 1)

model = Model(inputs=input_layer, outputs=[all_layers[i] for i in out_index])

print(model.summary())

model.save('{}'.format(output_path))

print('Saved Keras model to {}'.format(output_path))

# Check to see if all weights have been read.

remaining_weights = len(weights_file.read()) / 4

weights_file.close()

print('Read {} of {} from Darknet weights.'.format(count, count +

remaining_weights))

if remaining_weights > 0:

print('Warning: {} unused weights'.format(remaining_weights))

if __name__ == '__main__':

main()

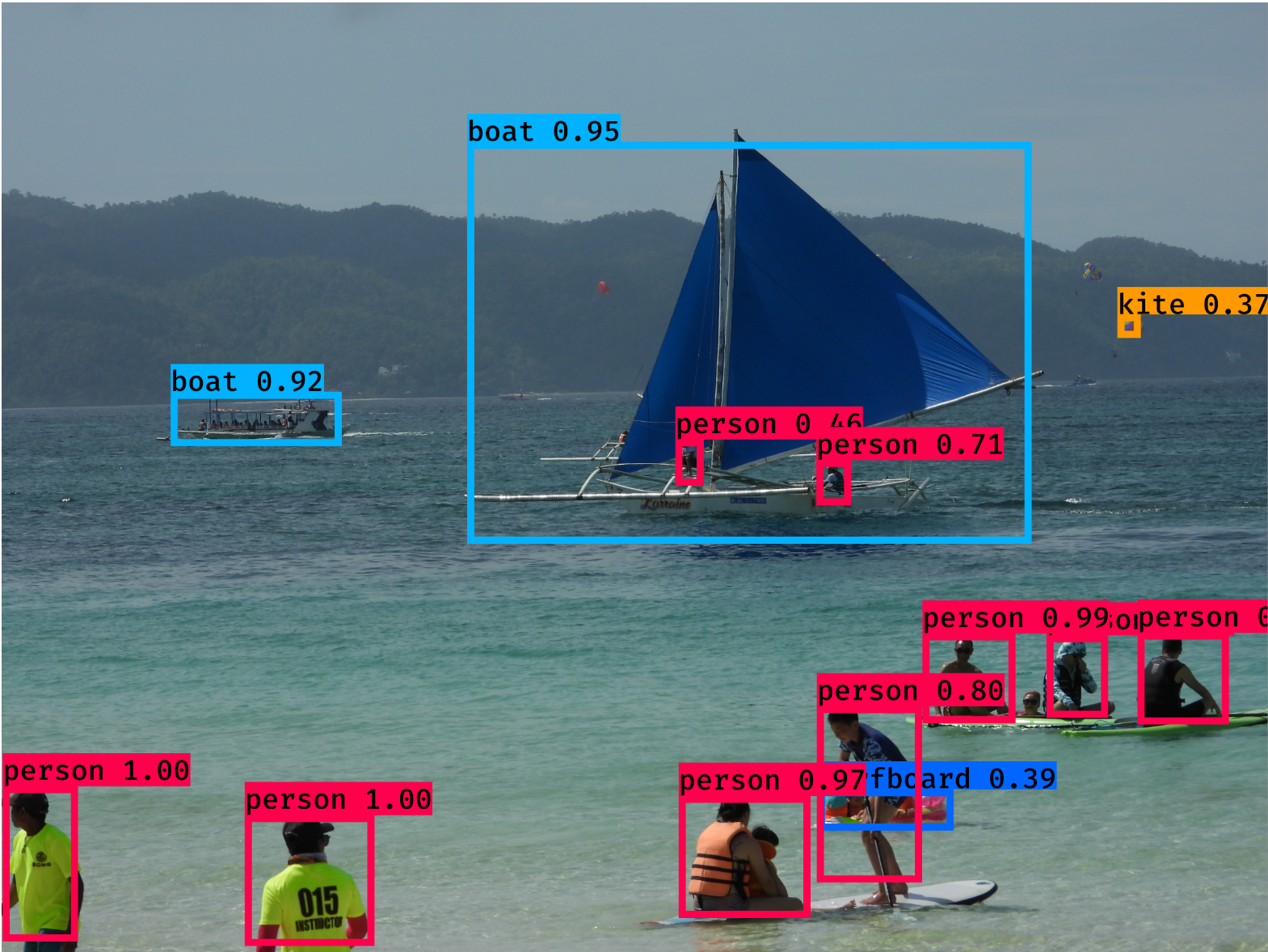

偵測圖片

模型(.cfg)有了,訓練後的資料(權重)也有了,接下來就可以開始偵測新圖片了

from yolo import YOLO

from PIL import Image

if __name__ == '__main__':

yolo=YOLO()

image=Image.open('demo.jpg')

yolo.detect_image(image).show()

訓練模型

若想訓練自已的模型,偵測自已想要的種類,就必需繼續下面的操作。首先,檢視一下,專案之下的 model_data 目錄是否有如下檔案

├─ 專案 │ ├─ model_data

│ │ ├─ xml

│ │ │ ├─ a.xml檔案

│ │ │ ├─ b.xml檔案

│ │ │ ├─ .....xml檔案 │ │ ├─ my_class.txt

│ │ ├─ yolo_anchors.txt

│ │ ├─ yola_weights.h5 │ ├─ settings.py

│ ├─ 訓練模型.py

│ ├─ 偵測.py

xml

xml 目錄下,包含了所有由labelImg所標示的xml檔。

my_class.txt

包含要訓練的種類,每個種類都需換行,檔案內容如下

cat

dog

bird

yolo_anchors.txt

是訓練時要使用的filter大小,共有9組,內容如下,請不要任意變更

10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

yolo_weights.h5

此檔案就是上述所說明,由官網下載的 yolo_weights,再經由 h5權重轉換.py 產生的 .h5檔。此檔作為接續訓練的 “預前預訓練檔”,一定要留著。

訓練模型.py

本程式已簡化官網的步驟,不需要轉換voc啦,分割train/test啦,也不需要將原始圖檔copy到專案。

本程式很簡單,只要把所有由 labelImg 產生的xml檔,全部copy到model_data/xml之下即可,其它的事都不用作。

訓練完成後,會自動在model_data目錄下產生trained_model_final.h5這個權重模型,此檔就可以用來偵測自已的圖片了。

那麼原始圖檔為什麼不用copy到專案呢? 因為xml裏就記載原始圖檔的目錄位置,訓練代碼自動會去抓,所以不要把原始圖檔砍掉就好.

代碼中,請依自已的顯卡記憶体調整 batch_size。記憶体愈小,batch_size就要調愈小。本人的nVidia RTX3080Ti 顯卡內存為12G, batch_size = 12 為最佳數值。

import numpy as np

import tensorflow.keras.backend as K

from tensorflow.keras.callbacks import TensorBoard, ModelCheckpoint, ReduceLROnPlateau, EarlyStopping

from tensorflow.keras.layers import Input, Lambda

from tensorflow.keras.models import Model

from tensorflow.keras.optimizers import Adam

from yolo3.model import preprocess_true_boxes, yolo_body, tiny_yolo_body, yolo_loss

from yolo3.utils import get_random_data

import os

import xml.etree.ElementTree as ET

import shutil

classes = ["cat","dog", "bird"] #定義自訂義類別名稱

batch_size = 12

def convert_annotation(file):

in_file = open('model_data/xml/%s.xml' %file, encoding='utf-8') #指定標註檔路徑

tree=ET.parse(in_file)

root = tree.getroot()

line=root.find('path').text

for obj in root.iter('object'):

difficult = obj.find('difficult').text

cls = obj.find('name').text

if cls not in classes or int(difficult)==1:

continue

cls_id = classes.index(cls)

xmlbox = obj.find('bndbox')

b = (int(xmlbox.find('xmin').text), int(xmlbox.find('ymin').text), int(xmlbox.find('xmax').text), int(xmlbox.find('ymax').text))

line+=" " + ",".join([str(a) for a in b]) + ',' + str(cls_id)

return line

def get_classes(classes_path):

'''loads the classes'''

with open(classes_path) as f:

class_names = f.readlines()

class_names = [c.strip() for c in class_names]

return class_names

def get_anchors(anchors_path):

'''loads the anchors from a file'''

with open(anchors_path) as f:

anchors = f.readline()

anchors = [float(x) for x in anchors.split(',')]

return np.array(anchors).reshape(-1, 2)

def create_model(input_shape, anchors, num_classes, load_pretrained=True, freeze_body=2,

weights_path='model_data/yolo_weights.h5'):

'''create the training model'''

K.clear_session() # get a new session

image_input = Input(shape=(None, None, 3))

h, w = input_shape

num_anchors = len(anchors)

y_true = [Input(shape=(h // {0: 32, 1: 16, 2: 8}[l], w // {0: 32, 1: 16, 2: 8}[l], \

num_anchors // 3, num_classes + 5)) for l in range(3)]

model_body = yolo_body(image_input, num_anchors // 3, num_classes)

print('Create YOLOv3 model with {} anchors and {} classes.'.format(num_anchors, num_classes))

if load_pretrained:

model_body.load_weights(weights_path, by_name=True, skip_mismatch=True)

print('Load weights {}.'.format(weights_path))

if freeze_body in [1, 2]:

# Freeze darknet53 body or freeze all but 3 output layers.

num = (185, len(model_body.layers) - 3)[freeze_body - 1]

for i in range(num): model_body.layers[i].trainable = False

print('Freeze the first {} layers of total {} layers.'.format(num, len(model_body.layers)))

model_loss = Lambda(yolo_loss, output_shape=(1,), name='yolo_loss',

arguments={'anchors': anchors, 'num_classes': num_classes, 'ignore_thresh': 0.5})(

[*model_body.output, *y_true])

model = Model([model_body.input, *y_true], model_loss)

return model

def create_tiny_model(input_shape, anchors, num_classes, load_pretrained=True, freeze_body=2,

weights_path='model_data/tiny_yolo_weights.h5'):

'''create the training model, for Tiny YOLOv3'''

K.clear_session() # get a new session

image_input = Input(shape=(None, None, 3))

h, w = input_shape

num_anchors = len(anchors)

y_true = [Input(shape=(h // {0: 32, 1: 16}[l], w // {0: 32, 1: 16}[l], \

num_anchors // 2, num_classes + 5)) for l in range(2)]

model_body = tiny_yolo_body(image_input, num_anchors // 2, num_classes)

print('Create Tiny YOLOv3 model with {} anchors and {} classes.'.format(num_anchors, num_classes))

if load_pretrained:

model_body.load_weights(weights_path, by_name=True, skip_mismatch=True)

print('Load weights {}.'.format(weights_path))

if freeze_body in [1, 2]:

# Freeze the darknet body or freeze all but 2 output layers.

num = (20, len(model_body.layers) - 2)[freeze_body - 1]

for i in range(num): model_body.layers[i].trainable = False

print('Freeze the first {} layers of total {} layers.'.format(num, len(model_body.layers)))

model_loss = Lambda(yolo_loss, output_shape=(1,), name='yolo_loss',

arguments={'anchors': anchors, 'num_classes': num_classes, 'ignore_thresh': 0.7})(

[*model_body.output, *y_true])

model = Model([model_body.input, *y_true], model_loss)

return model

def data_generator(annotation_lines, batch_size, input_shape, anchors, num_classes):

'''data generator for fit_generator'''

n = len(annotation_lines)

i = 0

while True:

image_data = []

box_data = []

for b in range(batch_size):

if i == 0:

np.random.shuffle(annotation_lines)

image, box = get_random_data(annotation_lines[i], input_shape, random=True)

image_data.append(image)

box_data.append(box)

i = (i + 1) % n

image_data = np.array(image_data)

box_data = np.array(box_data)

y_true = preprocess_true_boxes(box_data, input_shape, anchors, num_classes)

yield [image_data, *y_true], np.zeros(batch_size)

def data_generator_wrapper(annotation_lines, batch_size, input_shape, anchors, num_classes):

n = len(annotation_lines)

if n == 0 or batch_size <= 0: return None

return data_generator(annotation_lines, batch_size, input_shape, anchors, num_classes)

if __name__ == '__main__':

shutil.rmtree('logs')

#annotation_path = 'train.txt' # 待訓練清單(YOLO格式)

log_dir = 'logs/000/' # 訓練過程及結果暫存路徑

classes_path = 'model_data/road_classes.txt' # 自定義標籤檔路徑及名稱

anchors_path = 'model_data/yolo_anchors.txt' # 錨點定義檔路徑及名稱

class_names = get_classes(classes_path)

num_classes = len(class_names)

anchors = get_anchors(anchors_path)

input_shape = (416, 416) # multiple of 32, hw 預設輸入影像尺寸須為32的倍數(寬,高)

is_tiny_version = len(anchors) == 6 # default setting

if is_tiny_version:

model = create_tiny_model(input_shape, anchors, num_classes,

freeze_body=2, weights_path='model_data/tiny_yolo_weights.h5')

else:

model = create_model(input_shape, anchors, num_classes,

freeze_body=2, weights_path='model_data/yolo_weights.h5') # 指定起始訓練權重檔路徑及名稱

logging = TensorBoard(log_dir=log_dir)

checkpoint = ModelCheckpoint(log_dir + 'ep{epoch:03d}-loss{loss:.3f}-val_loss{val_loss:.3f}.h5',

monitor='val_loss', save_weights_only=True, save_best_only=True,

period=3) # 訓練過程權重檔名稱由第幾輪加上損失率為名稱

reduce_lr = ReduceLROnPlateau(monitor='val_loss', factor=0.1, patience=3, verbose=1)

early_stopping = EarlyStopping(monitor='val_loss', min_delta=0, patience=10, verbose=1)

val_split = 0.1

files = os.listdir('model_data/xml')

lines = []

for file in files:

lines.append(convert_annotation(file.split('.')[0]))

np.random.seed(10101)

np.random.shuffle(lines)

np.random.seed(None)

num_val = int(len(lines) * val_split)

num_train = len(lines) - num_val

# Train with frozen layers first, to get a stable loss.

# Adjust num epochs to your dataset. This step is enough to obtain a not bad model.

if True:

model.compile(optimizer=Adam(lr=1e-3), loss={

# use custom yolo_loss Lambda layer.

'yolo_loss': lambda y_true, y_pred: y_pred})

print('Train on {} samples, val on {} samples, with batch size {}.'.format(num_train, num_val, batch_size))

model.fit_generator(data_generator_wrapper(lines[:num_train], batch_size, input_shape, anchors, num_classes),

steps_per_epoch=max(1, num_train // batch_size),

validation_data=data_generator_wrapper(lines[num_train:], batch_size, input_shape, anchors,

num_classes),

validation_steps=max(1, num_val // batch_size),

epochs=50, # 訓練遍歷次數

initial_epoch=0, # 初始訓練遍歷次數

callbacks=[logging, checkpoint])

model.save_weights(log_dir + 'trained_weights_stage_1.h5') # 儲存臨時權重檔案名稱

# 解凍並繼續訓練以進行微調

# 如果效果不好則訓練更長時間

if True:

for i in range(len(model.layers)):

model.layers[i].trainable = True

model.compile(optimizer=Adam(lr=1e-4),

loss={'yolo_loss': lambda y_true, y_pred: y_pred}) # recompile to apply the change

print('Unfreeze all of the layers.')

print('Train on {} samples, val on {} samples, with batch size {}.'.format(num_train, num_val, batch_size))

model.fit_generator(data_generator_wrapper(lines[:num_train], batch_size, input_shape, anchors, num_classes),

steps_per_epoch=max(1, num_train // batch_size),

validation_data=data_generator_wrapper(lines[num_train:], batch_size, input_shape, anchors,

num_classes),

validation_steps=max(1, num_val // batch_size),

epochs=100, # 訓練遍歷次數

initial_epoch=50, # 初始訓練遍歷次數

callbacks=[logging, checkpoint, reduce_lr, early_stopping])

model.save_weights(log_dir + 'trained_weights_final.h5') # 儲存最終權重檔

model.save(log_dir + 'trained_model_final.h5') #儲存完整模型及權重檔

os.rename('logs/000/trained_model_final.h5', 'model_data/trained_model_final.h5')

# Further training if needed.

todo

參考 :

https://blog.csdn.net/aaronjny/article/details/103658254